What is PTVD?

About PTVD

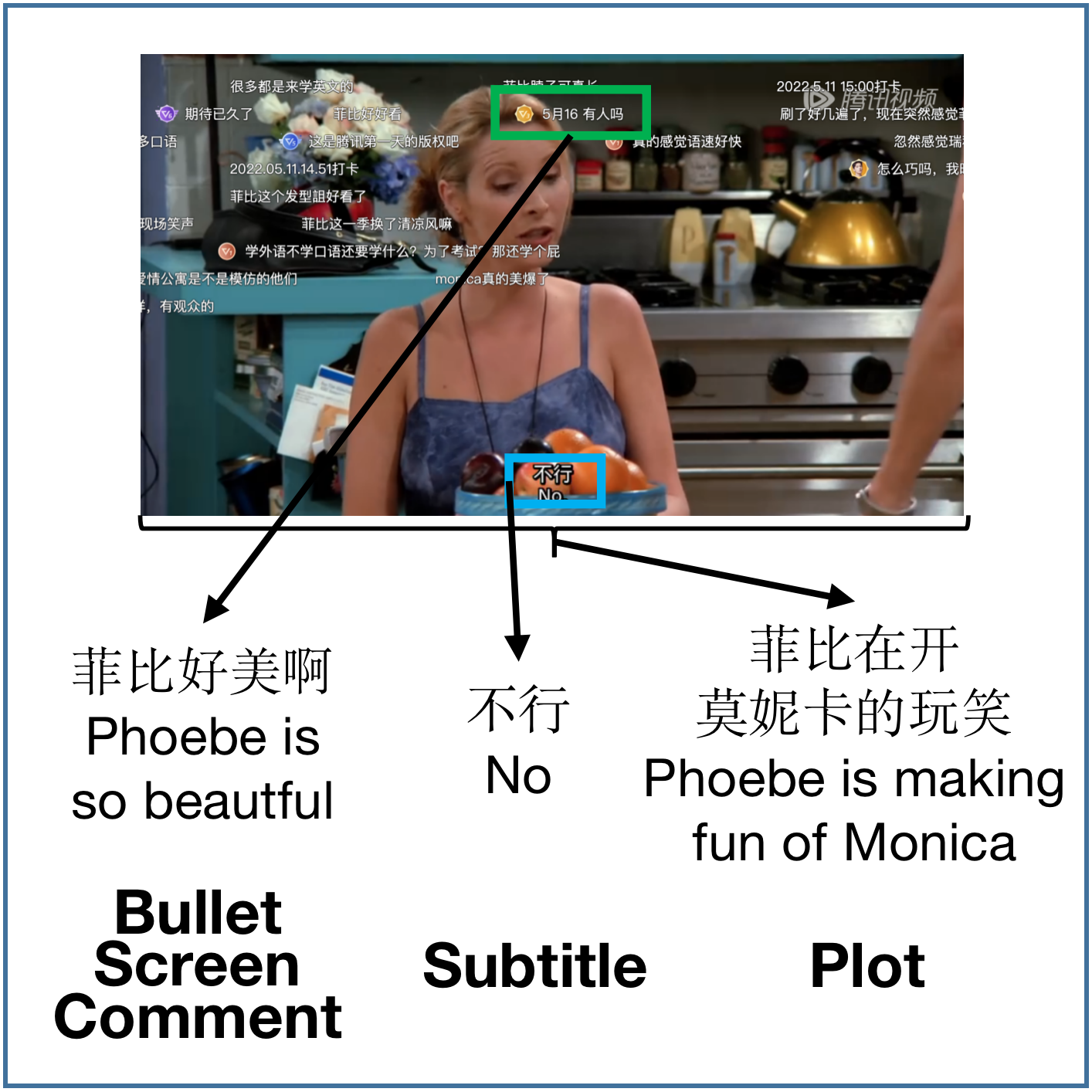

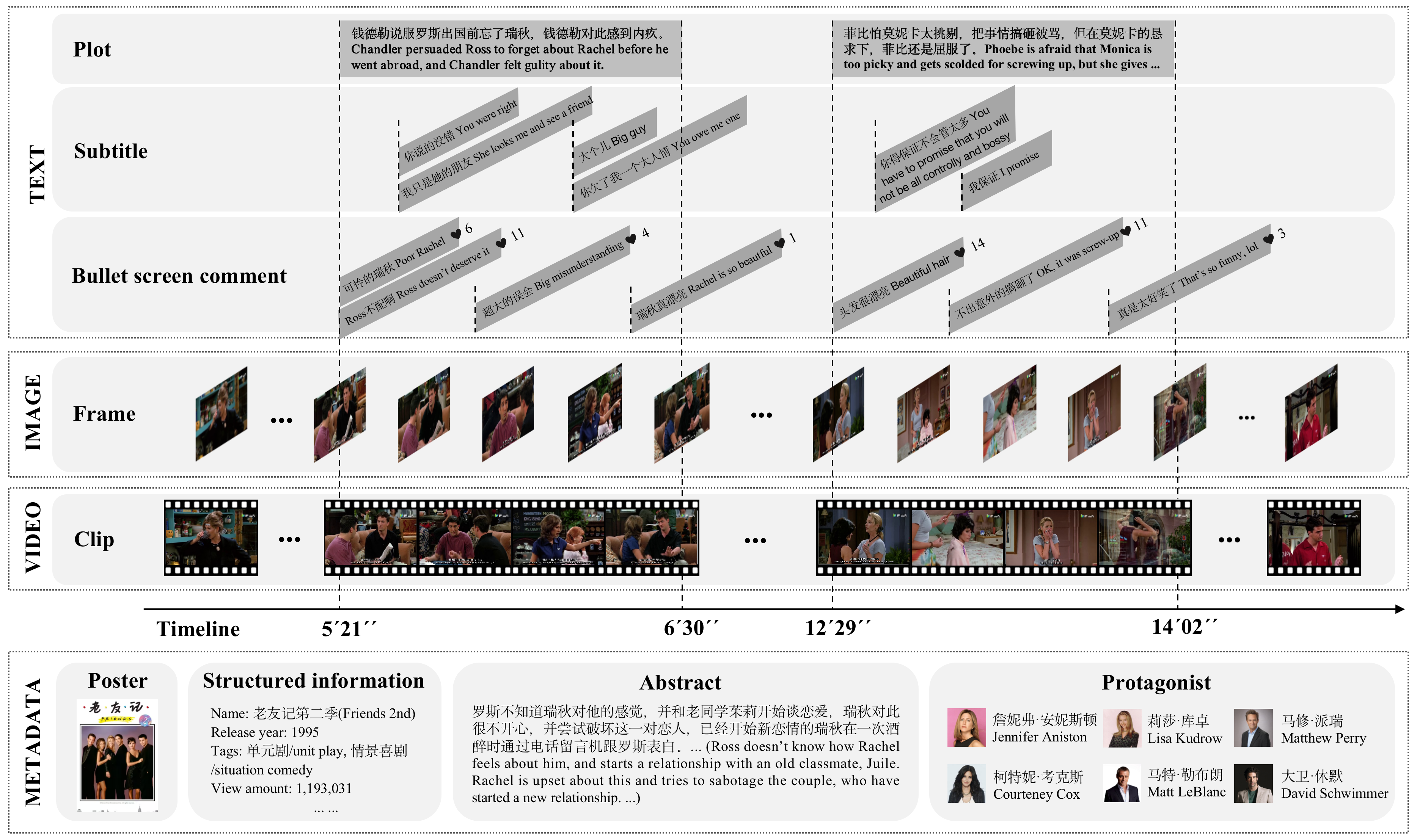

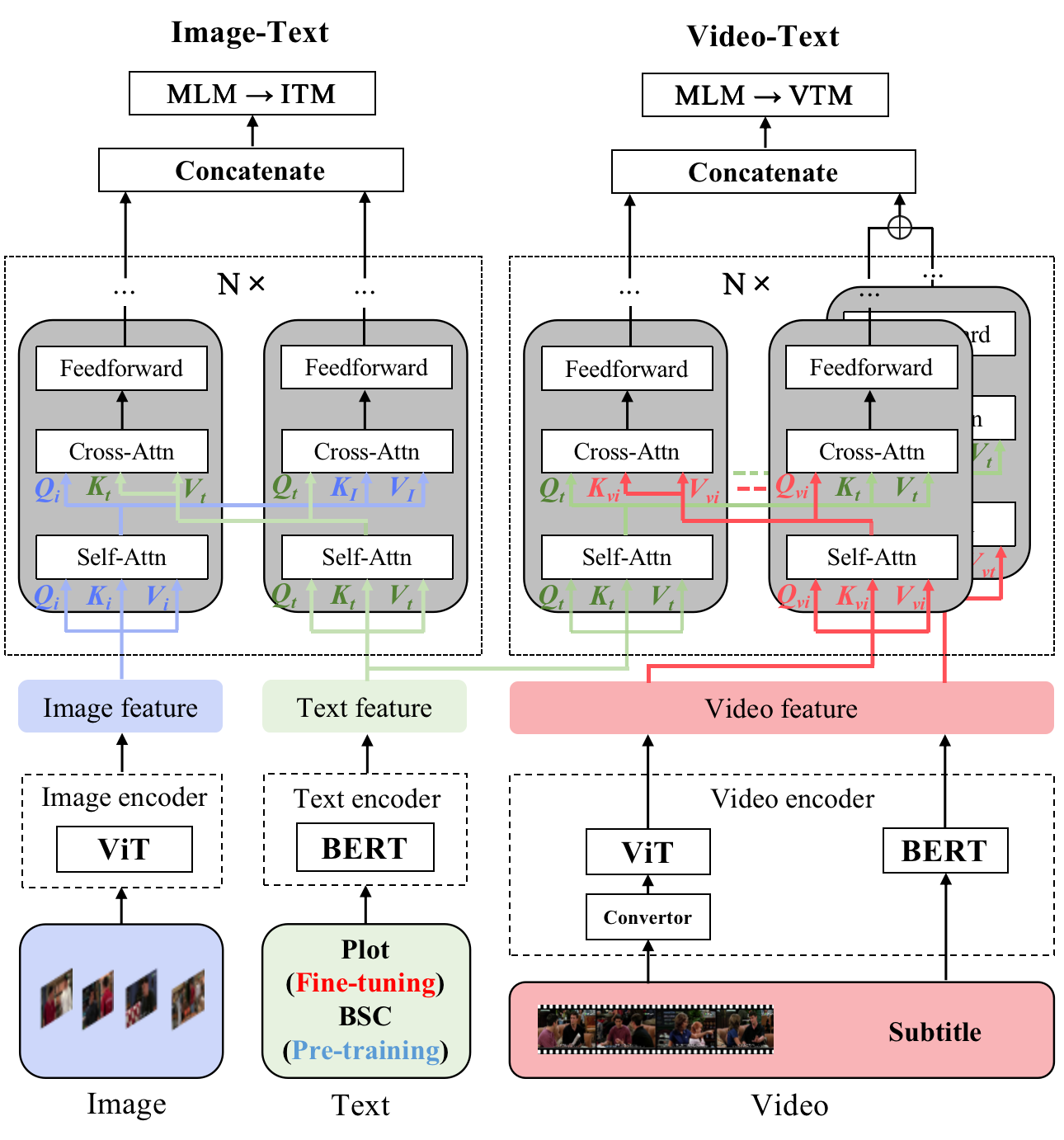

Art forms such as movies and television (TV) dramas reflect the real world, and they have recently attracted much attention from the multimodal learning community. However, existing corpora in this domain share three limitations: (i) because they are annotated in a scene-oriented fashion, they ignore the coherence within plots; (ii) their text lacks empathy and seldom mentions situational context; (iii) their video clips are too short to cover long-form relationships. To address these fundamental issues, we constructed PTVD, the first plot-oriented multimodal dataset in the cinema domain. It is built from 1,106 TV drama episodes and 24,875 informative, plot-focused sentences written by professionals, with the help of 449 human annotators.